ReadNext

A personalized book discovery experience designed to help readers find their next great read—faster, easier, and with more joy. ReadNext reduces decision fatigue by combining intuitive browsing, meaningful filters, and smart recommendations tailored to each reader’s preferences.

UI & UX

Project Overview

Project Type: Class Project

Industry: Reading & Learning Technology

Timeline: Jan 2025 - Apr 2025

My Role: UI/UX Designer, Researcher

Tools: Motiff AI, ChatGPT, Julius AI, Dovetail

Target Audience: Avid Readers (2+ books/month)

The Problem & User Insights

Readers struggle with decision fatigue and lack of trust when choosing their next book. Existing platforms prioritize commerce and generic bestsellers, often leading to a mismatch between the reader's current mood and the algorithmic suggestions.

Key Insights from 5 User Interviews:

Discovery is Personal: Users want context-aware recommendations for their current mood, not just similarity to past reads.

Lack of Trust: Participants consistently ignored generic "top charts" and craved personal curation from friends or themed community lists.

Choice Overload: Too much visual clutter and information caused users to simply stop scrolling, leading to decision paralysis.

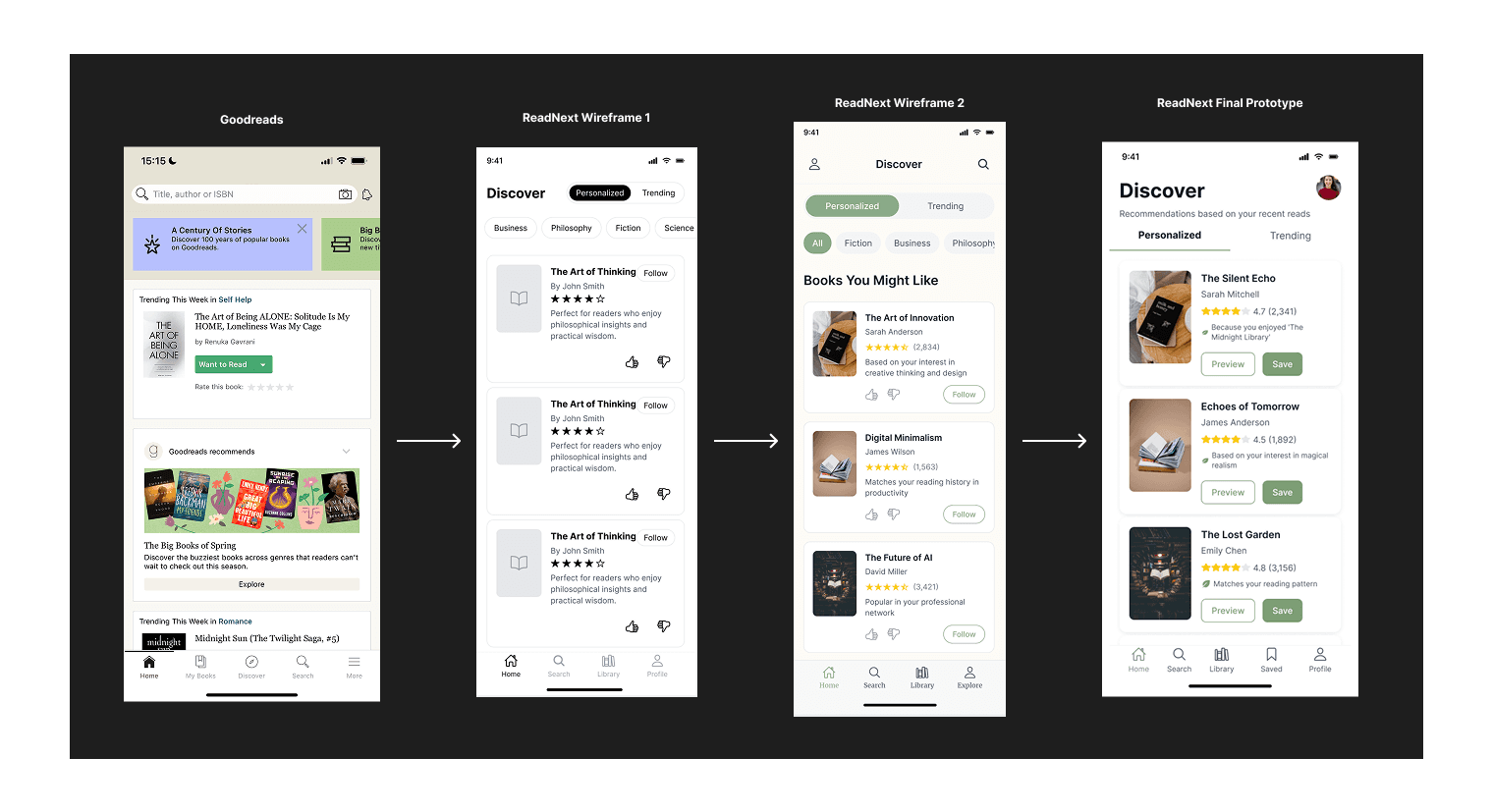

Design Solution: A Trust-Focused Discovery System

ReadNext reimagines the book selection experience by creating a calm, intuitive mobile space built on clarity, relevance, and trust.

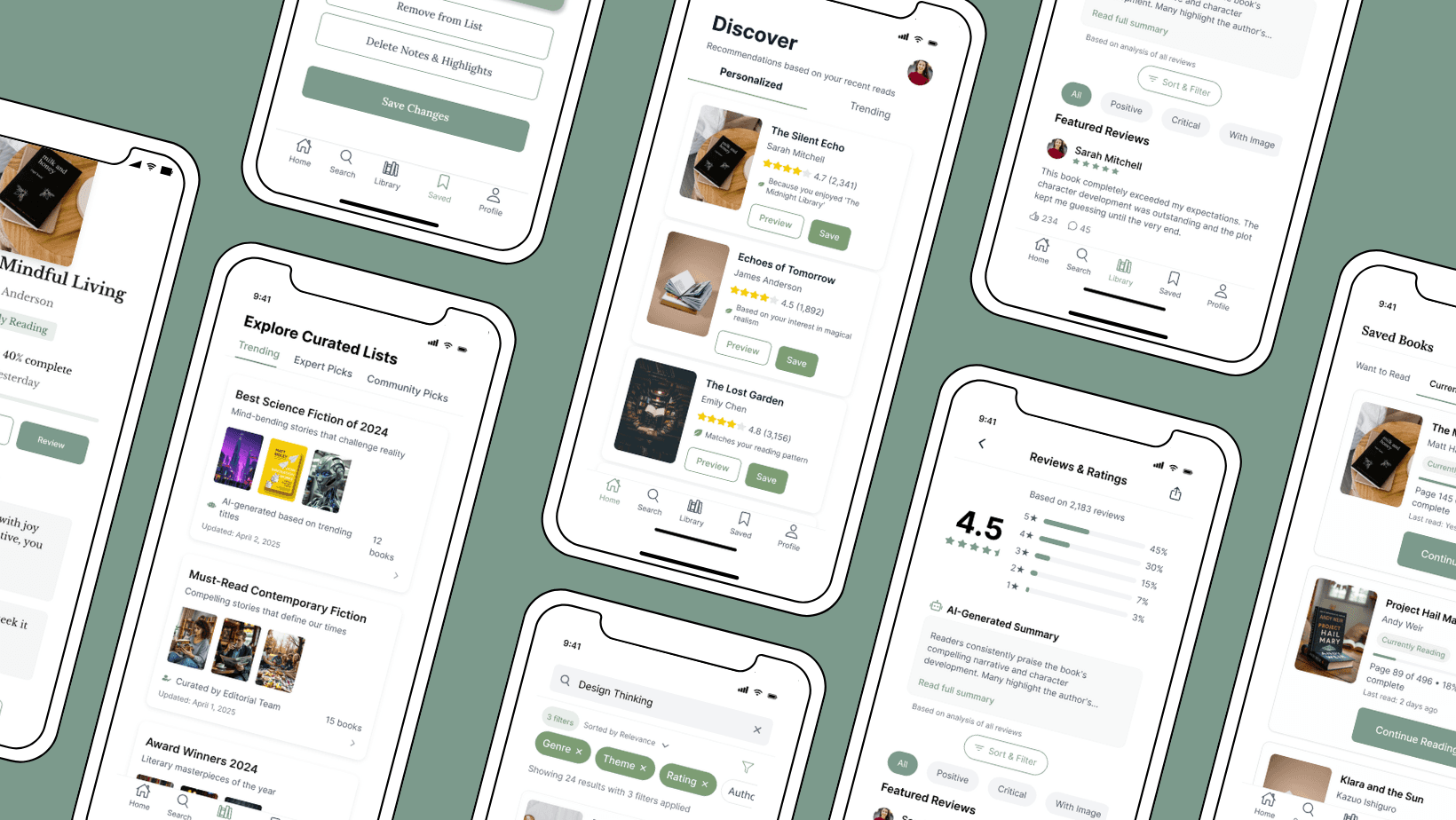

User Task | Design Solution | Impact/Validation |

|---|---|---|

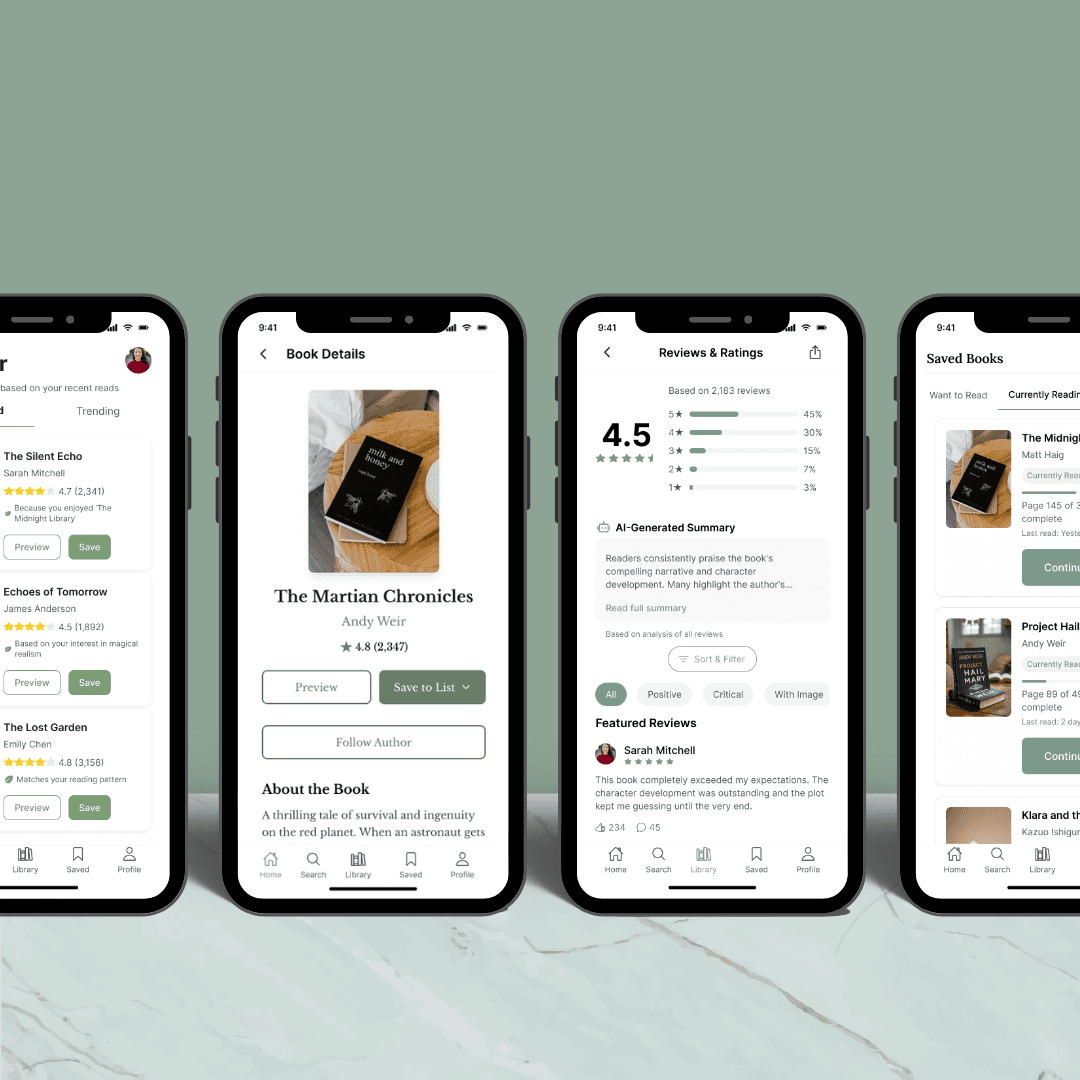

Discover New Reads | Introduced "Personalized" and "Trending" tabs with tags and clear explanations (e.g., "Because you enjoyed...") | Test participants felt the app was "curated—like someone with good taste" |

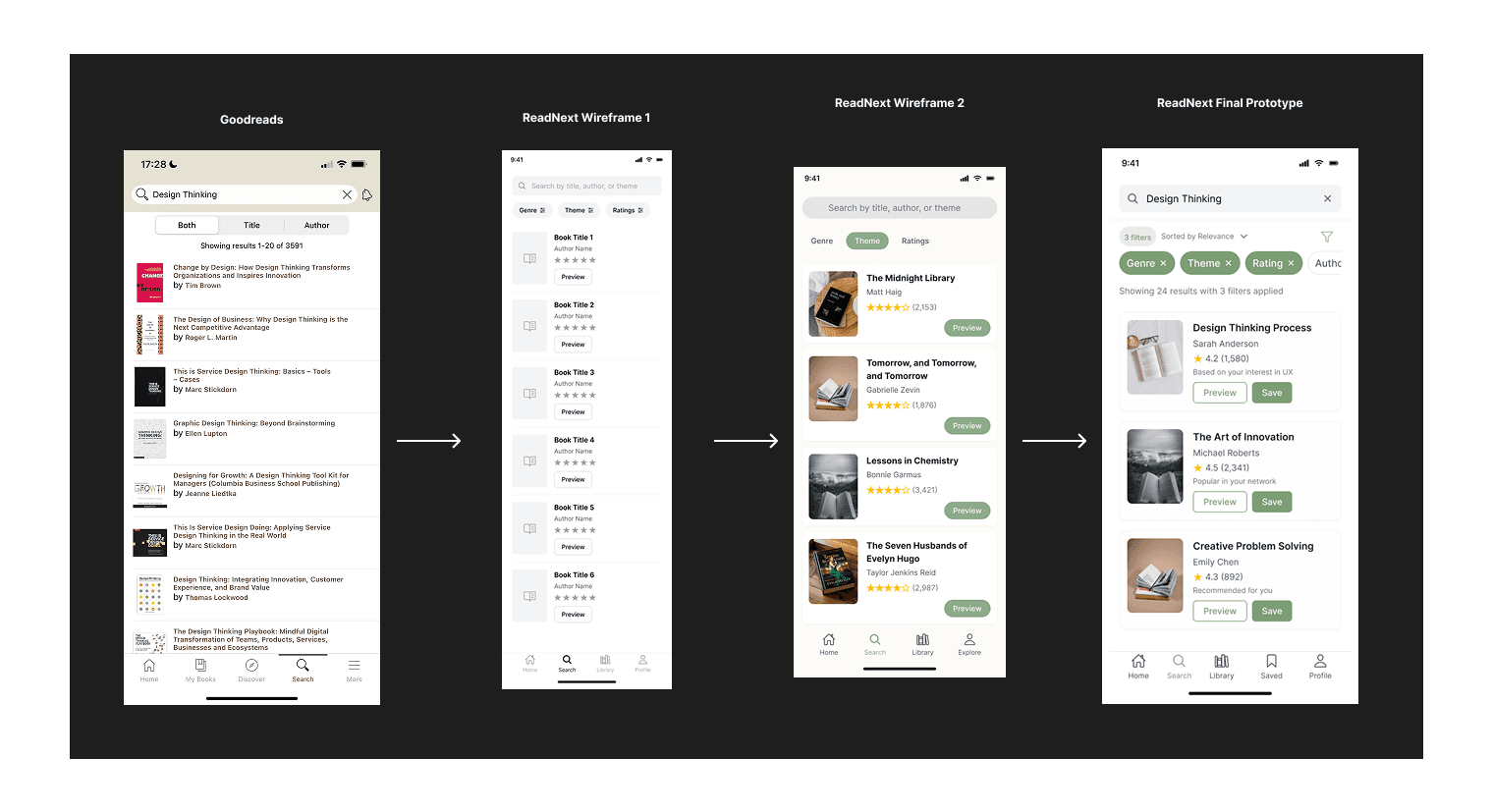

Search & Filter Results | Switched to soft filter chips (Genre, Theme, Rating) and preview-first cards to reduce visual density | Users felt the search was "more tailored than just a title dump" due to micro-explanations |

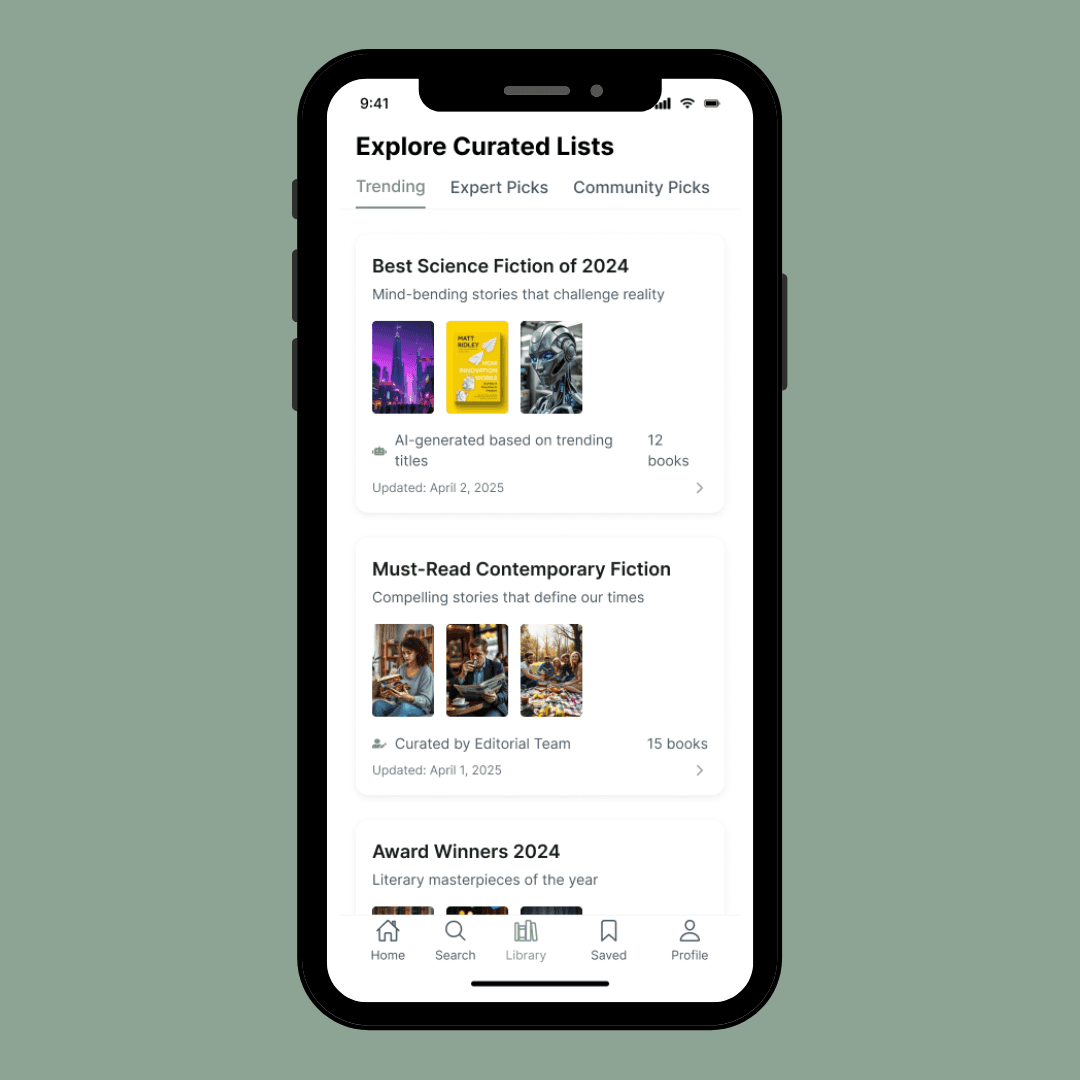

Explore Curated List | Created a separate section for Curated Collections (Trending, Expert, Community) with clear list descriptions and source transparency. | Users said, "Now I can tell where these lists are coming from. It actually makes me want to click into them". |

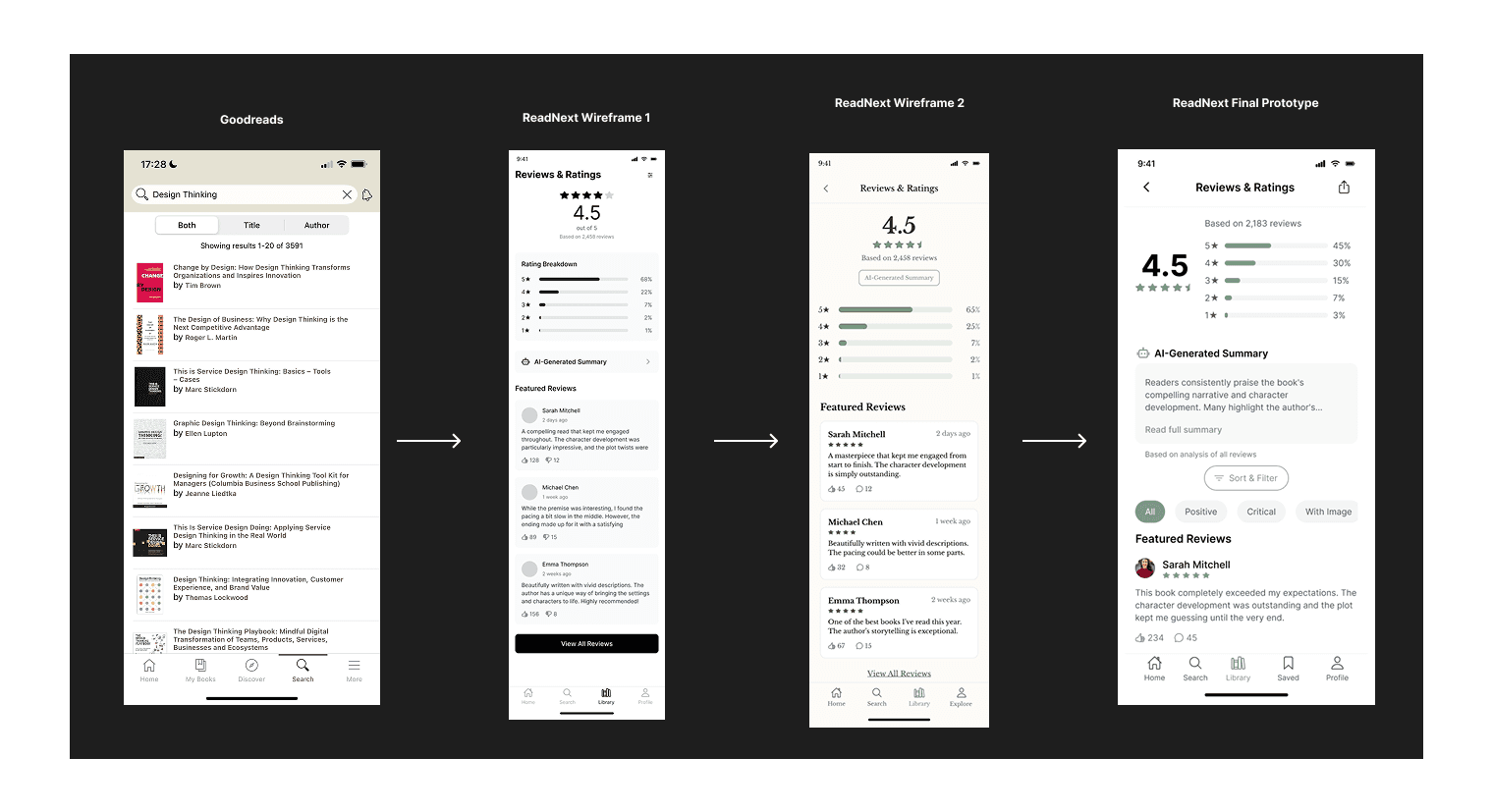

Reviews & Ratings | Combined an AI-generated summary of all reviews with percentage-based rating bars for quick scanning | "This actually makes me want to read the reviews — the summary gives me a clear picture first" |

Save & Track | Reimagined saving as a seamless experience with automatic categorization (Want to Read, Currently Reading) and built-in progress tracking | "I’d actually use this. I don’t have to think about organizing anything — it just works" |
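As a rough illustration of the percentage-based rating bars in the Reviews & Ratings row, the sketch below converts raw star counts into percentages and renders them as text bars. The function names, the bar width, and the star counts in the usage note are hypothetical and are not taken from the ReadNext prototype.

```python
def rating_percentages(star_counts):
    """Convert star counts, e.g. {5: 50, 4: 30}, into whole-number percentages."""
    total = sum(star_counts.values())
    if total == 0:
        # No reviews yet: show every bar as empty.
        return {stars: 0 for stars in star_counts}
    # Note: independent rounding means the values may not sum to exactly 100.
    return {stars: round(100 * count / total) for stars, count in star_counts.items()}


def render_bars(percentages, width=20):
    """Render one text bar per star level, highest rating first."""
    lines = []
    for stars in sorted(percentages, reverse=True):
        pct = percentages[stars]
        filled = round(width * pct / 100)
        lines.append(f"{stars} stars {'#' * filled}{'-' * (width - filled)} {pct}%")
    return lines
```

With made-up counts such as `{5: 50, 4: 30, 3: 10, 2: 5, 1: 5}`, `render_bars(rating_percentages(...))` returns five lines, one per star level, so a reader can compare the distribution at a glance before opening individual reviews.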

Usability Testing & Impact

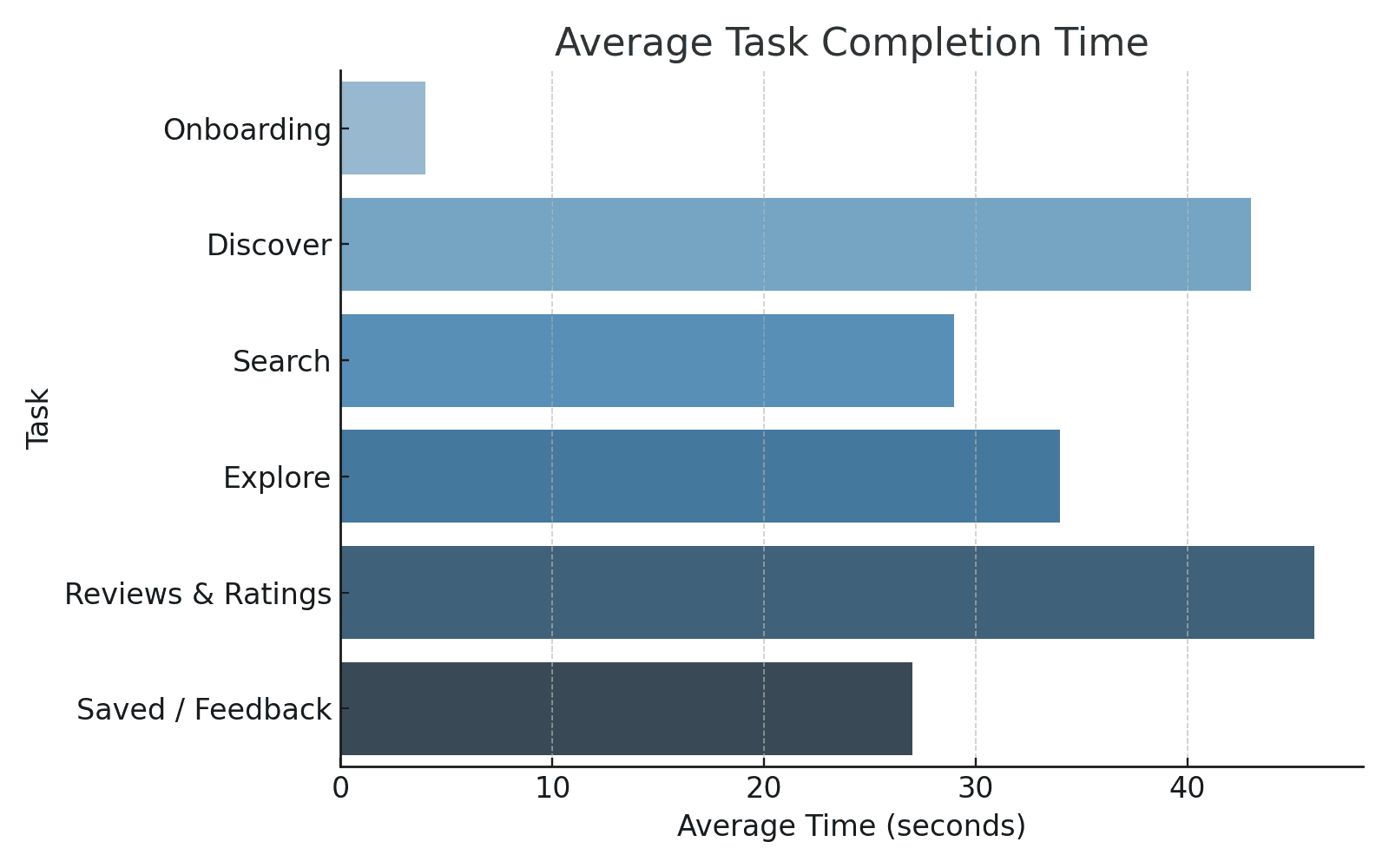

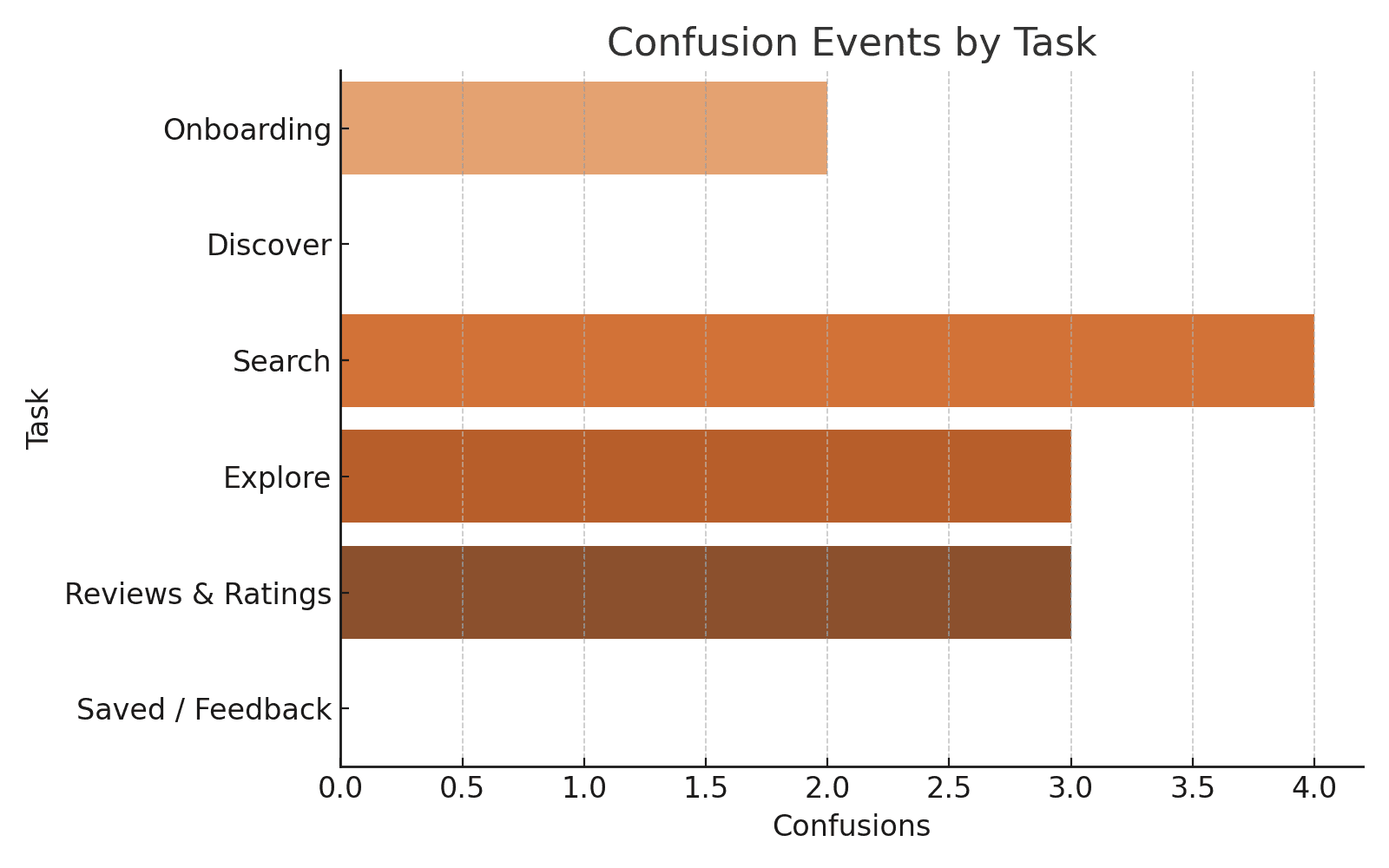

Methodology: Moderated usability tests were conducted with 5 avid readers across 6 core tasks, measuring task success, time, errors, and satisfaction.
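The four measures named above can be rolled up per task in a few lines. The following is a minimal sketch, assuming each test session is logged as one record per participant and task; the `TaskAttempt` fields, the 1-5 satisfaction scale, and the sample values are all placeholders for illustration and are not the study's actual data or tooling.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    participant: str
    task: str
    completed: bool
    seconds: float
    errors: int
    satisfaction: int  # assumed 1-5 scale; the study's scale is not stated


def summarize(attempts):
    """Group attempts by task and compute the four usability measures."""
    by_task = {}
    for attempt in attempts:
        by_task.setdefault(attempt.task, []).append(attempt)

    summary = {}
    for task, rows in by_task.items():
        summary[task] = {
            "success_rate": sum(r.completed for r in rows) / len(rows),
            "mean_seconds": mean(r.seconds for r in rows),
            "total_errors": sum(r.errors for r in rows),
            "mean_satisfaction": mean(r.satisfaction for r in rows),
        }
    return summary


# Placeholder records for illustration only; NOT results from the ReadNext tests.
sample = [
    TaskAttempt("P1", "Search & Filter", True, 40.0, 1, 4),
    TaskAttempt("P2", "Search & Filter", False, 60.0, 2, 3),
    TaskAttempt("P1", "Save & Track", True, 20.0, 0, 5),
]
```

Calling `summarize(sample)` returns one dictionary per task, which makes it easy to spot which tasks took longest or produced errors and to prioritize refinements accordingly.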

Key Design Insights:

Scannability: Tasks like Discover and Explore took the longest, leading to interface refinements for clearer hierarchy and scannable layouts.

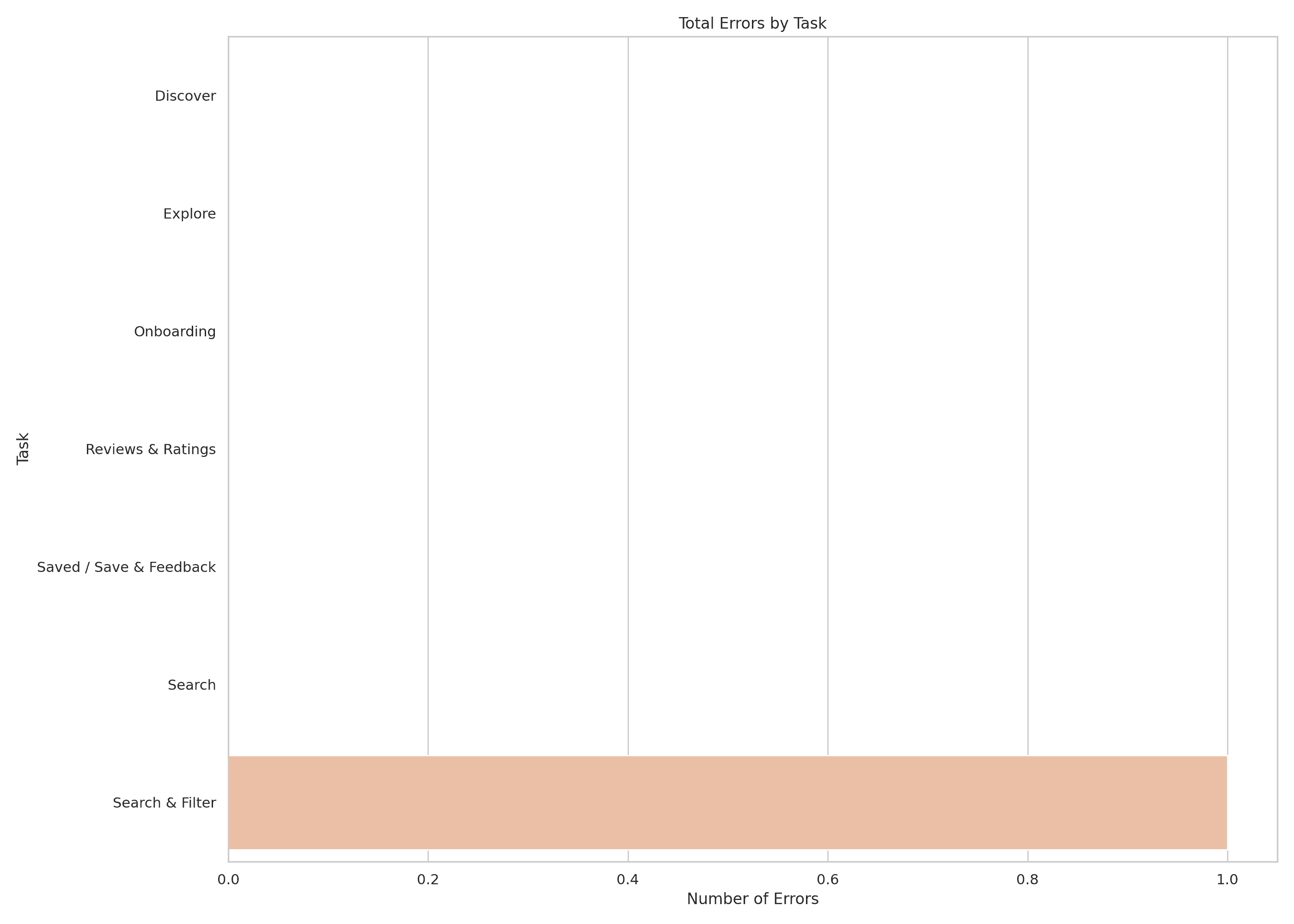

Filter Clarity: The only direct errors occurred during the Search & Filter task, which prompted a redesign of the chip-style filters for better visual clarity and user comprehension.

Reducing Confusion: Search, Reviews & Ratings, and Explore caused the most hesitation; these flows were immediately refined based on testing feedback.

Outcome: Testing grounded each feature in observed user behavior. The refinements targeted decision fatigue and clarity directly, making the discovery experience more intuitive and trustworthy.

AI Tools & Modern Workflow

| Tool | Key Application | Impact on Workflow |
|---|---|---|
| Motiff AI | Generated initial UI structures and visual flows for Search, Recommendation Cards, and Navigation | Accelerated wireframing, giving a visual jumpstart for early testing |
| Dovetail | Organized and transcribed all 1-on-1 user interviews | Enabled rapid pattern identification and tagging of key pain points (e.g., trust in community, decision fatigue) |
| Julius AI | Analyzed raw usability data (completion time, errors, confusion rates) | Visualized insights through charts and performance scores, enabling data-backed iteration decisions |
| ChatGPT | Assisted with drafting thoughtful interview protocols and writing tone-aligned microcopy for the UI | Acted as a sounding board for faster content iteration and flow support |

Key Takeaways & Reflection

Transparency Builds Trust: Clear explanations for why a book was recommended were a crucial trust signal.

Community > Algorithm: Readers relied more heavily on community-curated lists and peer reviews than on generic AI suggestions.

Focus on Gist: Users skimmed or skipped long text; summarized insights and scannable layouts improved engagement.

AI as an Accelerator: Motiff AI, ChatGPT, and Julius AI supported rapid wireframing, interview protocol drafting, and usability data analysis respectively, which sped up the iteration process.